Tutorial 2: Non-Gaussian Measurements#

API Version: Python NavAbilitySDK.py

YouTube Walk Through Video#

A walk through of this video has been prepared and can be found at:

Applications with Ambiguous Data#

There are many, many ways in which non-Gaussian / ambiguous measurements occur, this tutorial will simply pick one common example to illustrate the basic concepts. In short, measurements do not have to follow a unimodal bell curve (i.e. normal/Gaussian) but can instead introduce non-Gaussian beliefs.

Localizing ground robots suffering wheel slip or getting stuck (rotary encoders),

Fusing ambiguous RADAR data into navigation for driving autonomy,

Mapping underwater structures from ambiguous SONAR data,

Overcoming gearbox backlash in kinematic robot arms / legs,

Navigating ground / marine surface vehicles through GNSS, radio, laser ranging signal multipath situations (e.g. - urban canyon),

And many more.

A Basic 1D Example#

There are many, many ways in which non-Gaussian / ambiguous measurements occur, this tutorial will simply pick one common example to illustrate the basic concepts. In short, measurements do not have to follow a unimodal bell curve (i.e. normal/Gaussian) but can instead introduce non-Gaussian beliefs.

We ground the tutorial in an example: imagine a wheeled robot travelling along in a straight line, using wheel encoders to estimate the distance travelled. There is, however, a quirk, from time to time the robot gets stuck on something and the wheels spin for a few rotations before progress continues.

We assume that our software is able to detect when the wheels are slipping, but by how much is unclear. We take it that when slip is detected, the distance of slip measurement is only 40% accurate. In this tutorial, we will build a basic robot localization process that can handle this “bad” measurement data.

To help familiarize yourself with multi-modal beliefs, we limit this tutorial to a one dimensional example with only a few variables and factors. This tutorial introduces non-Gaussian behavior through non-Gaussian measurements in the factor graph. At the end of the page, we link to an example that is 2D, real-world, and has non-Gaussian odometry.

This example shows one of four mechanisms by which non-Gaussian behavior can be introduced into a factor graph solution, see other tutorials for other mechanisms. The ambiguous measurement example shown in this tutorial can readily be incorporated in other use cases.

The tutorial shows how multi-modal uncertainty can be introduced from non-Gaussian measurements, and how that is transmitted through the system. We will build a factor graph that contains multi-modal belief as well as Uniform and Rayleigh distributions. We can construct factor graph of heterogeneous data and let the joint inference find the best marginal posterior estimates on each of the desired variables in the factor graph.

Signatures Used#

ContinuousScalar, Prior, LinearRelative, Mixture, Normal

Ambiguous Data Example#

The first step is to load the NavAbilitySDK functionality and a few other library features,

ICRA Survey#

Thanks for doing our tutorials! We really want to make sure this is valuable for you.

This quick 2 minute survey will help us improve them for next year’s workshop.

# # ONLY IF REQUIRED, Install a pip package in the current Jupyter kernel

# import sys

# !{sys.executable} -m pip install navabilitysdk

from navability.entities import *

from navability.services import *

from uuid import uuid4

import asyncio

Starting a 1D Factor Graph#

Variable, ContinuousScalar#

The first thing to do is setup a client-context to talk with the NavAbility Platform.

The next step is to describe the inference problem with a graphical model by populating the factor graph with variable nodes.

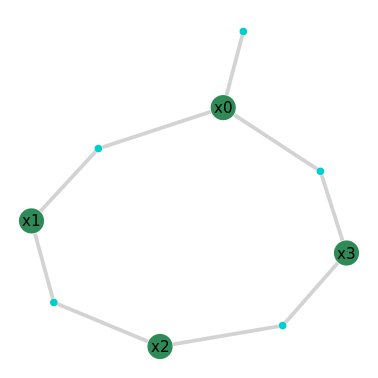

The variable nodes are identified by Strings, namely x0, x1, x2, x3.

navability_client = NavAbilityHttpsClient()

# you need a unique userId:robotId, and can keep using that across all tutorials

userId = "guest@navability.io"

robotId = "SDKpy_"+(str(uuid4())[0:3])

sessionId = "Tutorial2_"+(str(uuid4())[0:3])

client = Client(userId, robotId, sessionId)

# add the first node

result_id = await addVariable(navability_client, client, "x0", VariableType.ContinuousScalar)

print(f"Added x0 with result ID {result_id}")

# Wait for it to be loaded.

await waitForCompletion(navability_client, [result_id])

Prior Factor, (Euclidean(1))#

Factor graphs are bipartite graphs with factors that act as mathematical structure between interacting variables.

After adding node x0, a singleton factor of type Prior (which was defined by the user earlier) is ‘connected to’ variable node x0.

This unary factor is taken as a Normal distribution with zero mean and a standard deviation of 1.

# this is unary (prior) factor and does not immediately trigger autoinit of :x0.

# REF: addFactor!(fg, [:x0], Prior(Normal(0,1)))

result_id = await addFactor(navability_client, client, ["x0"], Prior(Normal(0, 1)))

Note, this example is using just Prior, which is a simplification for the sake of this tutorial. Look out for on-manifold prior factors in other code examples.

Visualizing Graph Topology#

Visualization for the SDK interfaces is provided by the NavAbility WebApp, click the generated graphics to open the link:

# click the generated graphic to link to the NavAbiltiy WebApp visualization

GraphVizApp(client, variableStartsWith="")

The topological graph plot obtained from the NavAbility App above shows the two node factor graph, one variable and one prior factor. This example uses graph-based automatic variable initialization which was discussed in ICRA 2022 Tutorial 1.

Next Variable and Relative Factor#

Now let’s add a second variable x1, and connect it to x0 with a LinearRelative factor.

# add x1

result_id = await addVariable(navability_client, client, "x1", VariableType.ContinuousScalar)

print(f"Added x1 with result ID {result_id}")

await getStatusLatest(navability_client, result_id)

# P(Z | :x1 - :x0 ) where Z ~ Normal(10,1)

result_id = await addFactor(navability_client, client, ["x0", "x1"], LinearRelative(Normal(10, 0.1)))

Visualizing the Variable Probability Belief#

The NavAbility WebApp allows visualization (plotting) of the belief state over any of the variable nodes. Remember the first time executions are slow given the required code compilation, and that future versions of these packages will use more precompilation to reduce first execution running cost.

We first need to trigger a solve for the web application to update its belief estimates. We first make sure that the all tasks have completed, and then trigger a new solve.

# wait for graph modifications to complete

await waitForCompletion(navability_client, [result_id])

# trigger a new solve

solve_request = await solveSession(navability_client, client)

print('running solve...')

Once the solve completes, we can plot the results using the NavAbility WebApp

# wait for the solve to complete

await waitForCompletion(navability_client, [solve_request], maxSeconds=180)

# click the generated graphic to link to the NavAbiltiy WebApp visualization

MapVizApp(client)

TIP, click the “SHOW DISTRIBUTIONS” button in the NavAbility App geometric visualization page to see the belief estimates of solved variables. You can also “HIDE PPES” parametric point estimates.

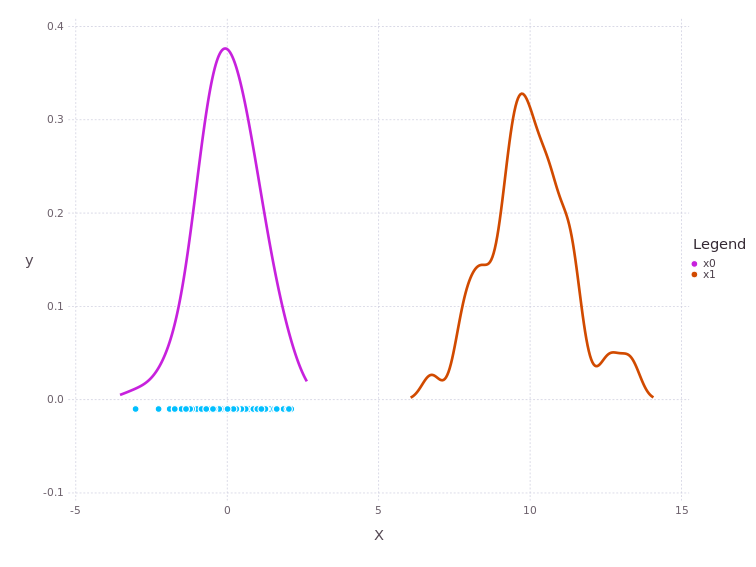

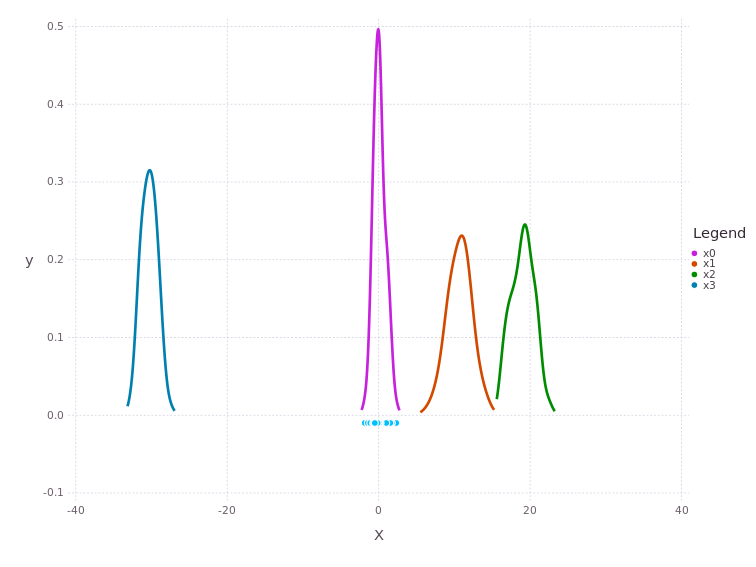

The predicted influence of the P(Z| X1 - X0) = LinearRelative(Normal(10, 1)) is shown by the x1 trace.

The predicted belief of x1 is nothing more than the approximated convolution of the current marginal belief of x0 with the conditional belief described by P(Z | X1 - X0).

Non-Gaussian Measurement to x2#

Non-parametric Measurements#

Important Side Note: Thus far, this tutorial has used parametric noise models, but the solver can equally consume nonparametric measurements in factors. These include kernel density estimates, intensity (heat) maps, and more. To simplify introduction of non-Gaussian noise models, this tutorial will include the simplest form, namely mixture distributions. A link to a fully non-parametric marine radar example is provided at the end of this tutorial.

Mixture Distribution on Next Relative Factor (Wheel Slip)#

As the robot continues to drive from x1 to x2, the robot software detects wheel slip has occurred. Here then is the critical point, how should the next odometry measurement incorporate this ambiguous information. First, we add the next ContinuousScalar variable x2 as before.

result_id = await addVariable(navability_client, client, "x2", VariableType.ContinuousScalar)

print(f"Added x2 with result ID {result_id}")

This time, however, the odometry factor from x1 uses a more complicated Mixture likelihood function. Given a 40% accuracy in wheel slip detection, we are going to construct a Mixture distribution as the measurement, where 40% weight goes to what we think the distance travelled is while the remaining 60% weight is designated to ‘faulty’ odometry measurement.

Taking the full encoder turns as 60 units distance, with slip being detected on and off throughout, we estimate the majority slip case as a Rayleigh distribution from 0. Since we know very little about the non-slip case, let’s take the robot distance traveled as uniformly distributed somewhere between 40 up to 60 units.

await addFactor(navability_client, client,

["x1", "x2"],

Mixture(LinearRelative,

{

"slip": Rayleigh(5),

"noslip": Uniform(35,60)

},

[0.4, 0.6],

2))

# let's wait to make sure all nodes were added

await waitForCompletion(navability_client, [result_id])

The mmo variable illustrates how a near arbitrary mixture probability distribution can be used as a conditional relationship between variable nodes in the factor graph.

In this case, a 40%/60% balance of a Rayleigh and truncated Uniform distribution which acts as a multi-modal conditional belief.

Interpret carefully what a conditional belief of this nature actually means.

This multi-modal belief implies that moving from one of the probable locations in x1 to a location in x2 by some processes defined by mmo=P(Z | X2, X1) is uncertain to the same 40%/60% ratio.

In practical terms, collapsing (through observation of an event) the probabilistic likelihoods of the transition from x1 to x2 may result in the x2 location being at either 15-20, or 40-65-ish units.

The predicted belief over x2 is illustrated by plotting the predicted belief after triggering a new solve.

solve_request = await solveSession(navability_client, client)

print('running solve...')

The results visualization updates automatically as the solver finishes. Here is the link to the Geometric map visualizations in th App again:

# wait until solve completes

await waitForCompletion(navability_client, [solve_request], maxSeconds=180)

# click the generated graphic to link to the NavAbiltiy WebApp visualization

MapVizApp(client)

Use the SHOW DISTRIBUTIONS button in the App visualization to see the underlying variable belief distribution estimates.

Each Mode Mean Slip or No-Slip#

Notice the multi-modal belief in the marginal posterior belief of x2. Also note that Kernel Density Estimate methods can appear to produce noisy belief density estimates. While this does sometimes occur, it is harmless and has no impact on the accuracy of the mean point estimate produced for each mode in the associated belief.

The x2 mode near 20 is the SLIP-MODE case, and the mode near 60 is the NO-SLIP-MODE.

Adding Variable x3#

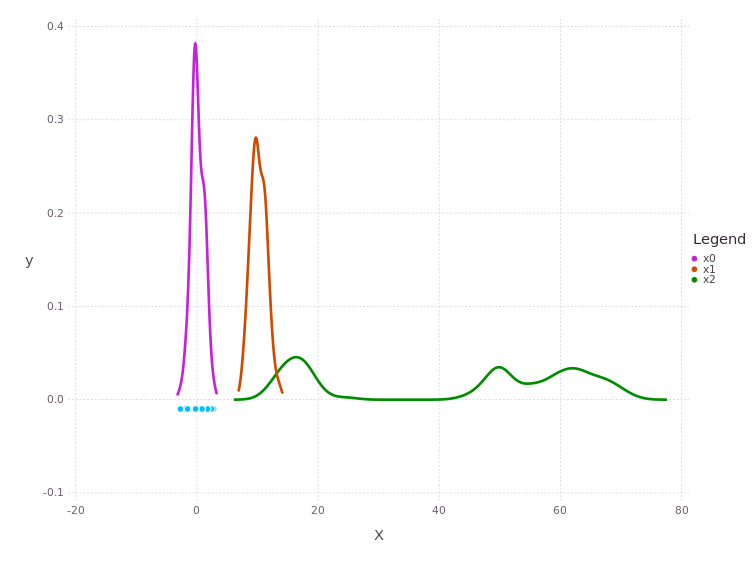

In suspecting that the robot did get stuck and had its wheels slip, we reverse the robot 50 units and add pose variable x3, i.e. a factor measurement LinearRelative(Normal(-50,1))

result_id = await addVariable(navability_client, client, "x3", VariableType.ContinuousScalar)

print(f"Added x3 with result ID {result_id}")

result_id = await addFactor(navability_client, client, ["x2", "x3"], LinearRelative(Normal(-50, 1)))

# let's wait to make sure all nodes were added

await waitForCompletion(navability_client, [result_id])

expands the factor graph to four variables and four factors.

This part of the tutorial shows how a unimodal likelihood (conditional belief) can transmit the bimodal belief currently contained in x2. By solving the graph, we get numerical estimates for the variables:

solve_request = await solveSession(navability_client, client)

print('running solve...')

and plotting the marginal posterior belief estimates over each variable to see what the position estimates are given available info:

# wait until solve completes

await waitForCompletion(navability_client, [solve_request], maxSeconds=180)

# click the generated graphic to link to the NavAbiltiy WebApp visualization

MapVizApp(client)

Notice the x3 trace is a shifted and slightly spread out version of the belief on x2, through the convolution with the conditional belief P(Z | X2, X3).

The Last Factor#

Only by introducing more information into the factor graph can inference extract more precise marginal belief estimates for each of the variables.

A final piece of information added to this graph is a factor directly relating x3 with x0.

result_id = await addFactor(navability_client, client, ["x3", "x0"], LinearRelative(Normal(30, 1)))

# let's wait to make sure all nodes were added

await waitForCompletion(navability_client, [result_id])

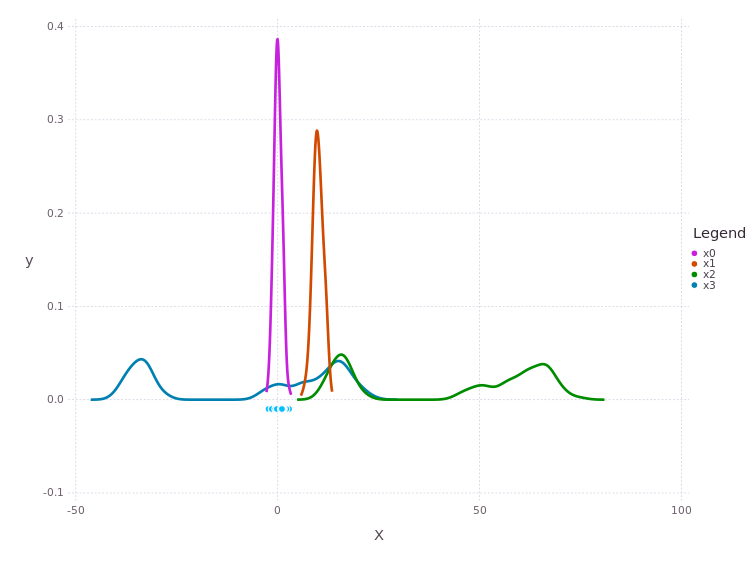

Pay close attention to what this last factor means in terms of the probability density traces shown in the previous figure.

The trace for x3 has two major modes, one that overlaps with x0, x1 near 0 and a second mode further to the left at -40.

The last factor introduces a shift LinearRelative(Normal(40,1)) which essentially aligns the left most mode of x3 back onto x0.

Solve the Graph#

Global inference over the full graph can now be triggered.

Again, we use solveSession to trigger the final solve in the NavAbility platform.

solve_request = await solveSession(navability_client, client)

print('running solve...')

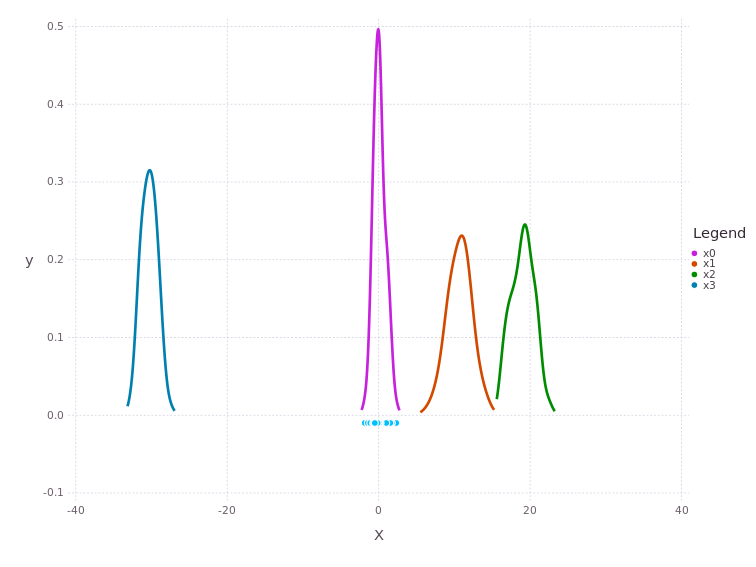

This last factor forces a mode selection through consensus.

By doing global inference, the new information obtained in x3 will be equally propagated to x2 where only one of the two modes will remain. Let’s look at the factor graph again:

# click the generated graphic to link to the NavAbiltiy WebApp visualization

GraphVizApp(client)

# wait until solve completes

await waitForCompletion(navability_client, [solve_request], maxSeconds=180)

# click the generated graphic to link to the NavAbiltiy WebApp visualization

MapVizApp(client)

Re-solve to ensure Stable Solution#

Resolving will demonstrate a stable solution.

solve_request = await solveSession(navability_client, client)

print('running solve...')

# wait until solve completes

await waitForCompletion(navability_client, [solve_request], maxSeconds=180)

# click the generated graphic to link to the NavAbiltiy WebApp visualization

MapVizApp(client)

The resulting posterior marginal beliefs over all the system variables are:

variables = await ls(navability_client, client)

print(f"Variables in graph: {variables}")

ppes = {

v: (await getVariable(navability_client, client, v)).ppes["default"].suggested

for v in variables

}

print(ppes)

The Wheels Did Slip!#

Look, the resulting distance from x1 to x2 with all the data available turns out to be 10 units. This is much less than the 60 units measured by the encoder. This means the SLIP-MODE case survived, and no-slip case disappeard.

This example here is constructed in such a way that when all data is considered together a clear answer can be extracted. In real situations, the beliefs could be more nuanced – which is all the more reason to consider non-Gaussian estimation which permanently keeps track of the nuanced features hidden in the data!

Conclusion#

It is important to note that although this tutorial ends with all marginal beliefs having near Gaussian shape and are unimodal, that the package supports multi-modal belief estimates during both the prediction and global inference processes.

Next Steps#

The next tutorial looks at weakly observable (a.k.a. missing / insufficient data, or underdetermined) situations where all Gaussian measurements result in highly non-Gaussian and multi-modal posterior estimates.